I’m an AI Scientist at UL Solutions, where I design and apply advanced machine learning techniques to build safe, transparent, and reliable AI systems for real-world applications.

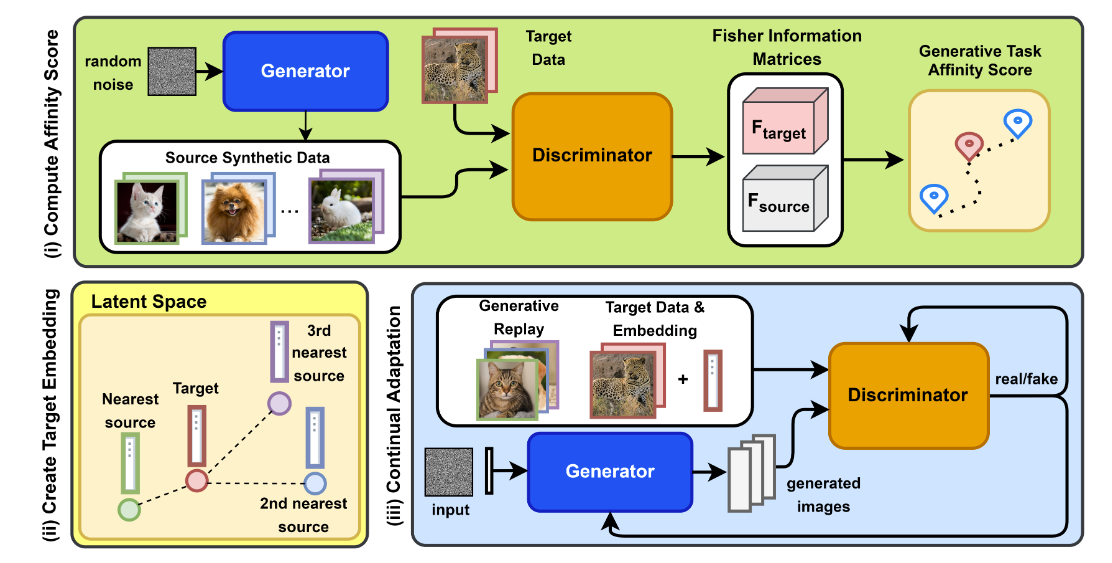

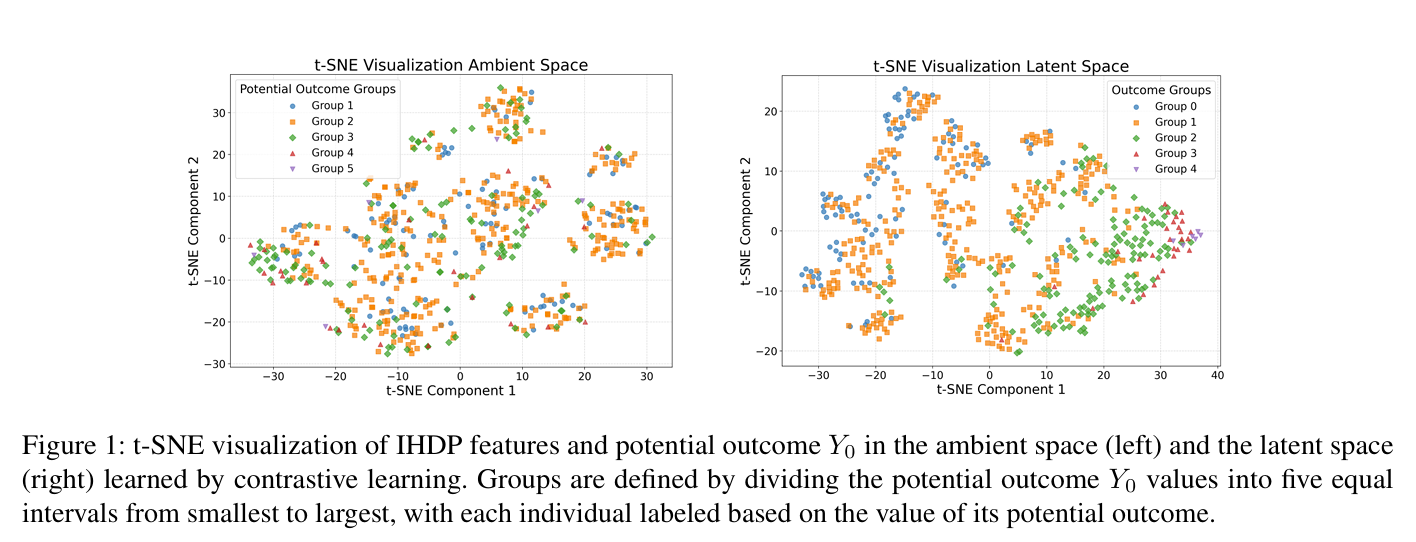

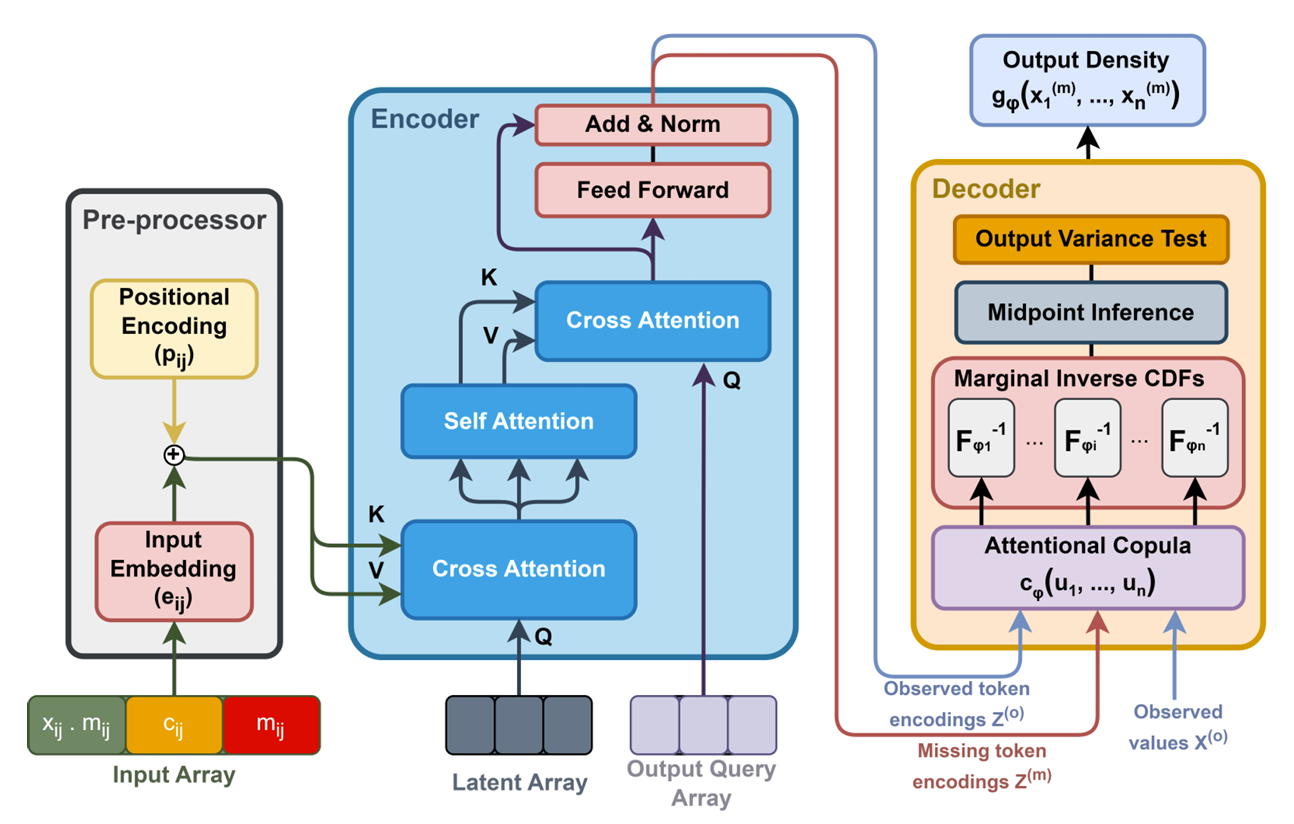

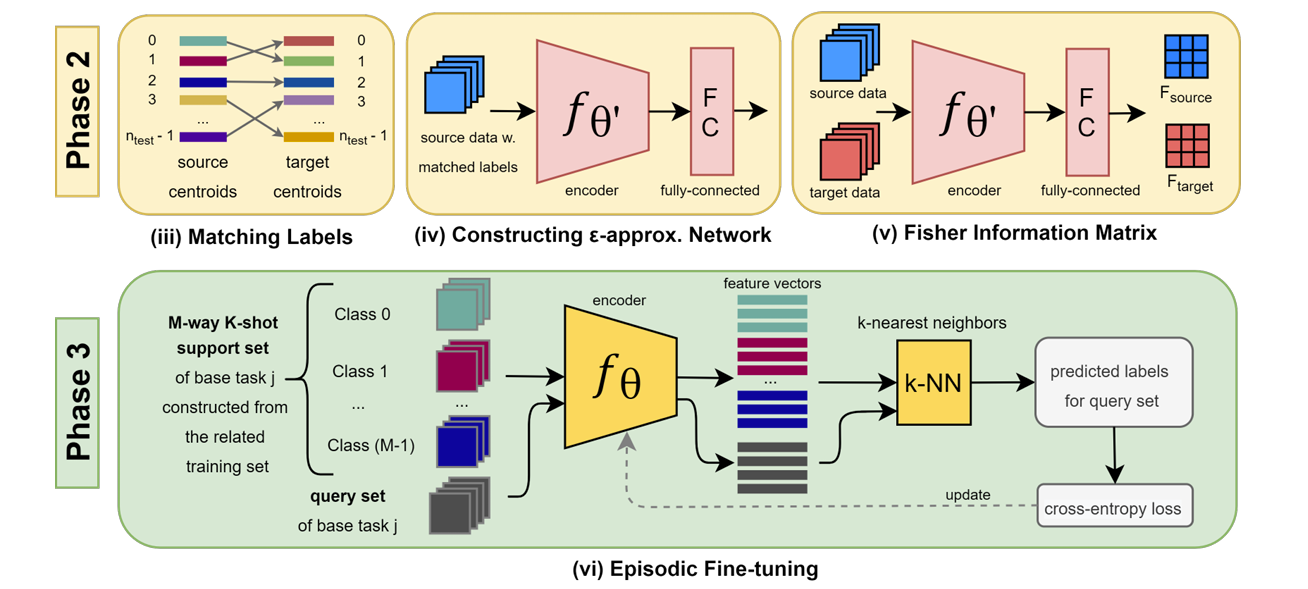

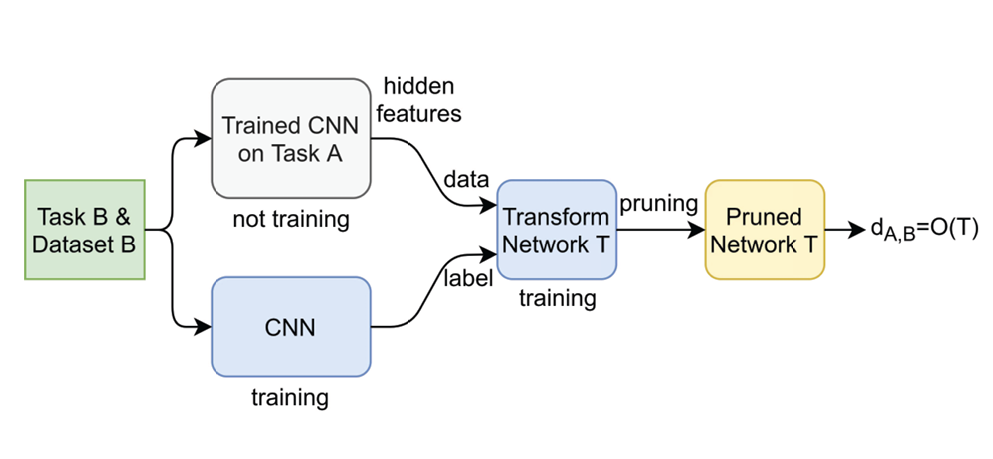

I earned my Ph.D. in Machine Learning from Duke University, where I studied task affinity and its applications in machine learning under the guidance of Dr. Vahid Tarokh. Before that, I completed my M.S. in Electrical Engineering at the California Institute of Technology (Caltech) and my B.S. in Electrical and Computer Engineering at Rutgers University, graduating summa cum laude.

I’m passionate about bridging the gap between cutting-edge AI research and practical safety applications, ensuring that intelligent systems not only perform well but also do so responsibly.

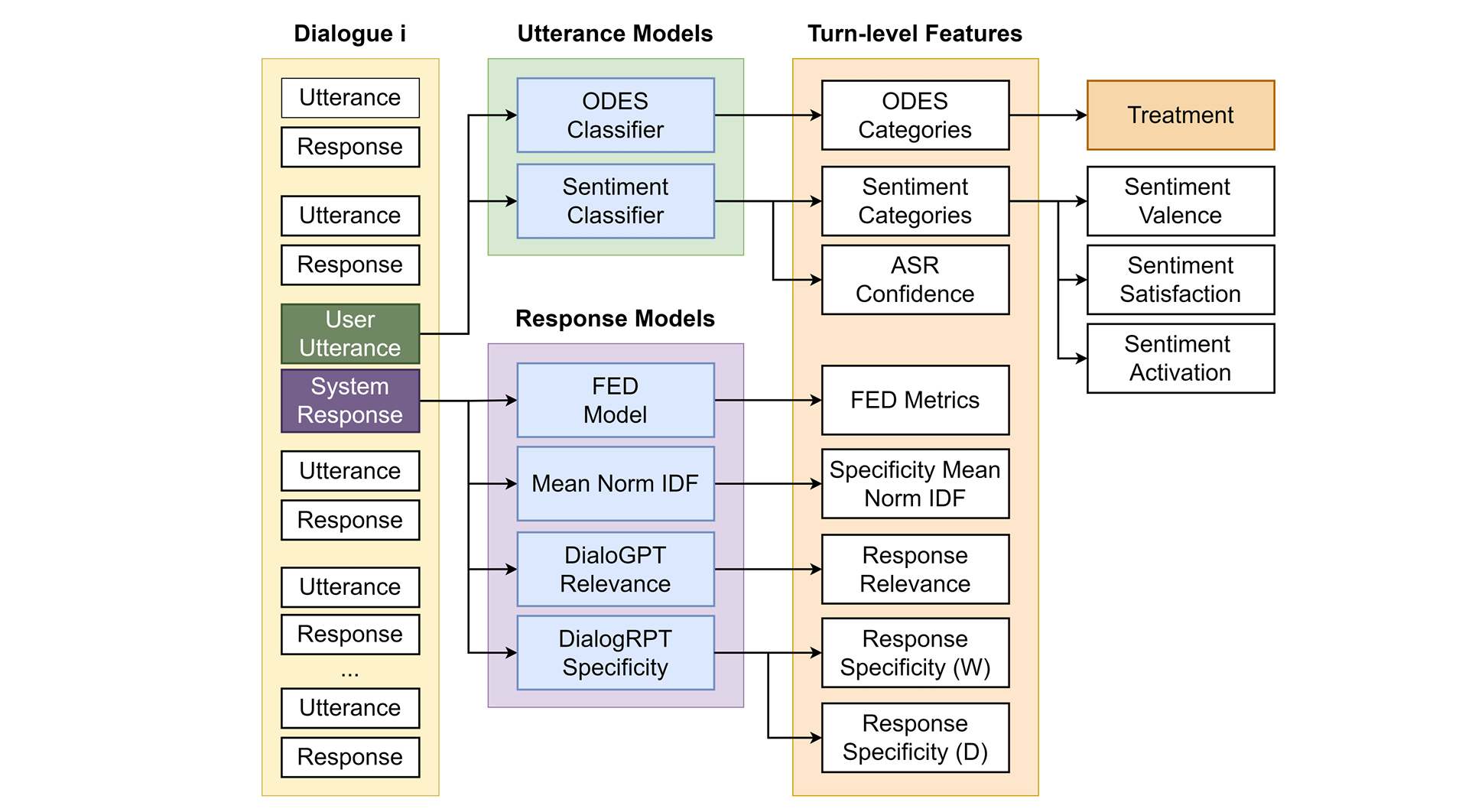

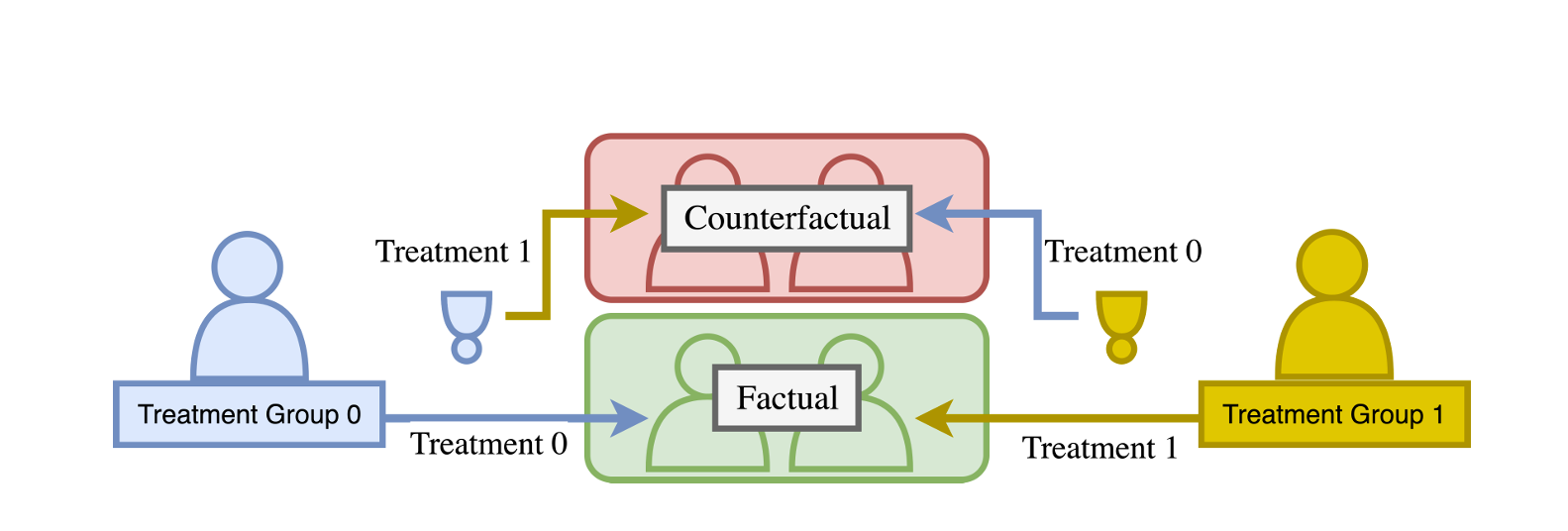

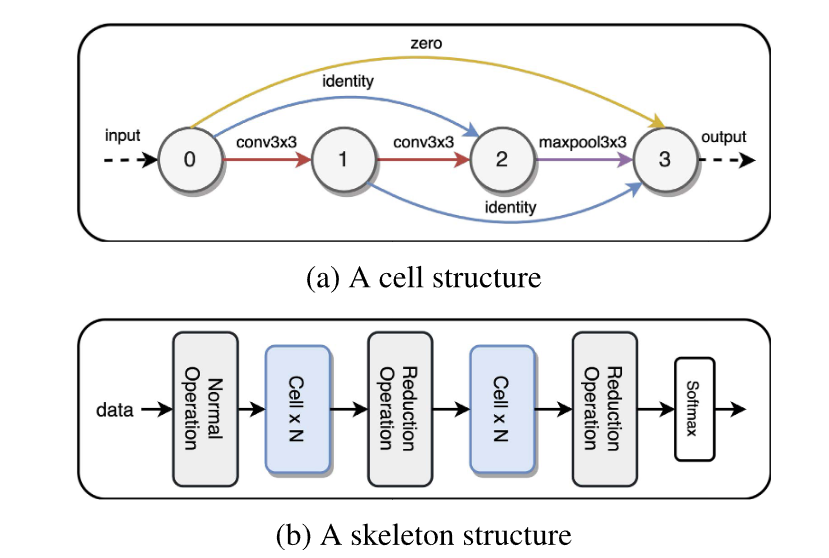

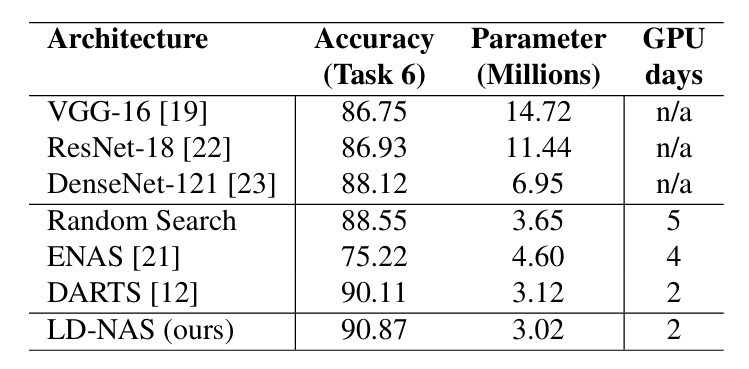

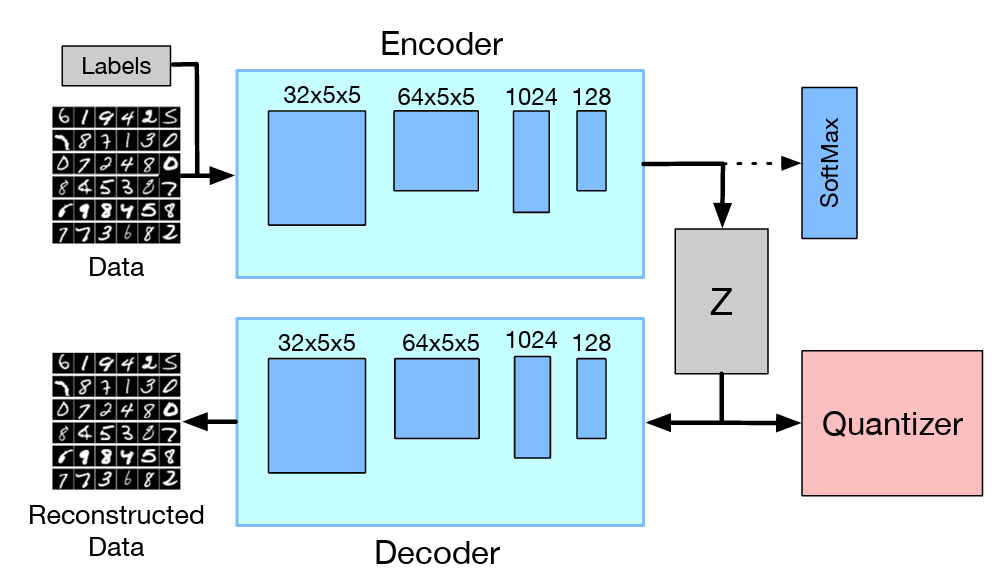

My research spans machine learning algorithms, computer vision, and natural language processing, with a focus on transfer learning, few-shot learning, multi-task learning, and neural architecture search.

I’ve been honored with distinctions such as the Matthew Leydt Society, John B. Smith Award, and Nikola Tesla Scholar, and I’m an active member of Tau Beta Pi, Eta Kappa Nu, and Sigma Alpha Pi, reflecting my dedication to excellence and innovation in engineering and research.