Improving Open-Domain Dialogue Evaluation with a Causal Inference Model

Abstract

Effective evaluation methods remain a significant challenge for research on open-domain conversational dialogue systems. Explicit satisfaction ratings can be elicited from users, but users often do not provide ratings when asked, and those they give can be highly subjective. Post-hoc ratings by experts are an alternative, but these can be both expensive and complex to collect. Here, we explore the creation of automated methods for predicting both expert and user ratings of open-domain dialogues. We compare four different approaches. First, we train a baseline model using an end-to-end transformer to predict ratings directly from the raw dialogue text. The other three methods are variants of a two-stage approach in which we first extract interpretable features at the turn level that capture, among other aspects, user dialogue behaviors indicating contradiction, repetition, disinterest, compliments, or criticism. We project these features to the dialogue level and train a dialogue-level MLP regression model, a dialogue-level LSTM, and a novel causal inference model called counterfactual-LSTM (CF-LSTM) to predict ratings. The proposed CF-LSTM is a sequential model over turn-level features which predicts ratings using multiple regressors depending on hypotheses derived from the turn-level features. As a causal inference model, CF-LSTM aims to learn the underlying causes of a specific event, such as a low rating. We also bin the user ratings and perform classification experiments with all four models. In evaluation experiments on conversational data from the Alexa Prize SocialBot, we show that the CF-LSTM achieves the best performance for predicting dialogue ratings and classification.

TL;DR: The paper introduces CF-LSTM, a causal inference model that predicts dialogue ratings by modeling user behaviors and counterfactual outcomes, outperforming traditional models in both regression and classification tasks. By leveraging interpretable features and treatment-based reasoning, it enables scalable and robust evaluation of open-domain dialogue systems.

Motivation

Evaluating open-domain dialogue systems is challenging due to:

- Sparse and subjective user ratings

- Expensive expert annotations

- Automated metrics lacking nuance

The paper introduces a causal inference model to improve automated evaluation of dialogue systems.

Models Compared

- Transformer Baseline: End-to-end model using raw text.

- Dialogue-Level MLP: Aggregates turn-level features into dialogue-level inputs for regression.

- Dialogue-Level LSTM: Uses LSTM to model sequential turn-level features.

- Counterfactual LSTM (CF-LSTM): Novel causal model using treatment-based regressors and counterfactual reasoning.

Feature Engineering

Turn-level features include:

- ODES Classifier: Detects user behaviors (e.g., insult, compliment)

- Sentiment Analysis: Valence, satisfaction, activation

- DialogRPT & FED Metrics: Relevance, specificity, fluency

- ASR Confidence Scores: From speech recognition
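The turn-level features are projected to the dialogue level before the dialogue-level MLP is trained. The source does not say which pooling is used, so the sketch below assumes mean, max, and last-turn pooling. The function name `aggregate_turn_features` and the feature names are hypothetical, chosen only for illustration.

```python
from statistics import mean


def aggregate_turn_features(turns):
    """Pool per-turn feature dicts into one dialogue-level feature dict.

    Assumption: every turn carries the same numeric features, and mean/max/last
    pooling is an illustrative choice, not the pooling used in the paper.
    """
    if not turns:
        raise ValueError("dialogue has no turns")
    dialogue = {}
    for name in sorted(turns[0]):
        values = [turn[name] for turn in turns]
        dialogue[f"{name}_mean"] = mean(values)   # average level over the dialogue
        dialogue[f"{name}_max"] = max(values)     # did the behavior ever occur?
        dialogue[f"{name}_last"] = values[-1]     # state at the final turn
    return dialogue


# Two hypothetical turns with ODES-style behavior flags and an ASR confidence.
turns = [
    {"insult": 0.0, "compliment": 1.0, "asr_confidence": 0.9},
    {"insult": 1.0, "compliment": 0.0, "asr_confidence": 0.7},
]
features = aggregate_turn_features(turns)
```

The sequential models (LSTM and CF-LSTM) would instead consume the per-turn vectors in order, so pooling of this kind applies only to the MLP-style input.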

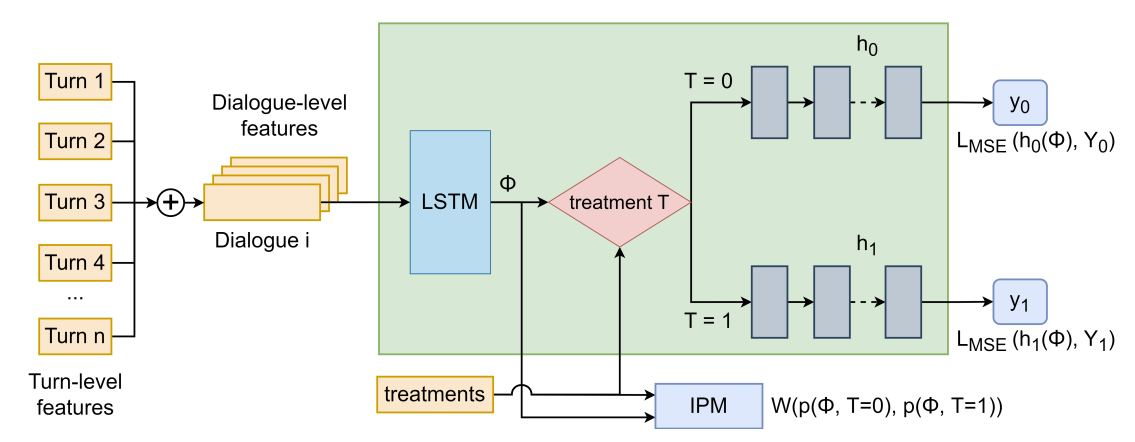

The overview architecture of the proposed CF-LSTM model.

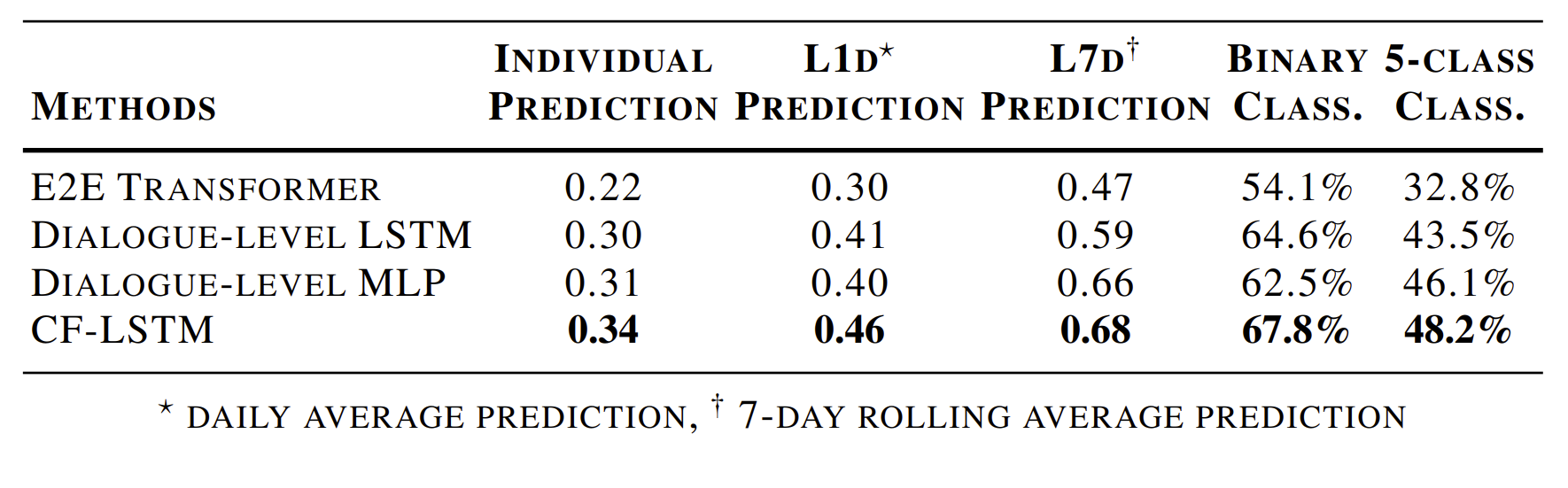

Comparison of open-domain dialogue evaluation methods on SocialBot conversations: Pearson correlation for the regression problem (e.g., individual, L1d, and L7d predictions) and prediction accuracy for the classification problem (e.g., binary and 5-class).

Causal Inference Framework

- Uses treatment assignment based on ODES signals

- Assumes that all confounders are observed and captured by the extracted features

- Trains separate regressors for treated vs. untreated dialogues

- Loss function includes Integral Probability Metric (IPM) to reduce bias
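The four points above can be sketched as a loss computation. This is a minimal illustration under stated assumptions, not the paper's implementation: the treatment rule (any turn showing a chosen behavior), the use of a linear MMD (squared distance between group means) as the IPM estimate, the weight `alpha`, and all function names are hypothetical. In CF-LSTM the representations would come from the LSTM; here they are plain lists.

```python
def assign_treatment(turn_signals, signal="criticism"):
    """Return 1 if any turn shows the chosen behavior, else 0 (assumed rule)."""
    return int(any(turn.get(signal, 0) > 0 for turn in turn_signals))


def linear_mmd(treated_reps, control_reps):
    """One simple IPM estimate: squared distance between the group centroids."""
    def centroid(reps):
        return [sum(column) / len(reps) for column in zip(*reps)]

    mu_treated, mu_control = centroid(treated_reps), centroid(control_reps)
    return sum((a - b) ** 2 for a, b in zip(mu_treated, mu_control))


def cf_loss(reps, treatments, ratings, head_treated, head_control, alpha=1.0):
    """Factual MSE from the head matching each dialogue's treatment,
    plus alpha times the IPM penalty between treated and control groups."""
    squared_error = 0.0
    for rep, treated, rating in zip(reps, treatments, ratings):
        head = head_treated if treated == 1 else head_control
        squared_error += (head(rep) - rating) ** 2
    mse = squared_error / len(ratings)

    treated_reps = [r for r, t in zip(reps, treatments) if t == 1]
    control_reps = [r for r, t in zip(reps, treatments) if t == 0]
    # The penalty is defined only when both groups are present in the batch.
    ipm = linear_mmd(treated_reps, control_reps) if treated_reps and control_reps else 0.0
    return mse + alpha * ipm


# Toy batch: two treated dialogues, one control dialogue, constant regressors.
reps = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0]]
treatments = [1, 1, 0]
ratings = [2.0, 2.0, 4.0]
loss = cf_loss(reps, treatments, ratings, lambda r: 2.0, lambda r: 4.0)
```

In this toy batch both heads fit their group exactly, so the whole loss comes from the IPM term: the penalty pushes the treated and control representations toward each other, which is how the term is meant to reduce bias from unbalanced groups.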

Experiments & Results

Using Alexa Prize SocialBot data, the models were evaluated on:

- Regression: Predicting continuous ratings

- Classification: Binary and 5-class rating prediction
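The two evaluation settings can be illustrated with a small scoring script. It assumes a 1 to 5 rating scale, a binary threshold of 3, and round-half-up binning into five classes; none of these choices is stated in the source, and the helper names are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between predicted and gold ratings."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)


def to_binary(rating, threshold=3.0):
    """Assumed binning: ratings above the threshold count as positive."""
    return int(rating > threshold)


def to_five_class(rating):
    """Assumed binning: round half up, then clamp to the classes 1..5."""
    return min(5, max(1, int(math.floor(rating + 0.5))))


def accuracy(predicted, gold):
    """Fraction of positions where the predicted class equals the gold class."""
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


# Hypothetical gold ratings and model predictions for four dialogues.
gold = [1.0, 3.0, 4.0, 5.0]
pred = [1.4, 2.6, 4.4, 4.8]

r = pearson(pred, gold)
binary_acc = accuracy([to_binary(p) for p in pred], [to_binary(g) for g in gold])
five_acc = accuracy([to_five_class(p) for p in pred], [to_five_class(g) for g in gold])
```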

Key Contributions

- Introduces CF-LSTM: a causal model for dialogue evaluation

- Combines linguistic, acoustic, and behavioral features

- Improves prediction accuracy and interpretability

- Scales to large datasets for automated evaluation

Citation

@article{le2023improving,
  title={Improving open-domain dialogue evaluation with a causal inference model},
  author={Le, Cat P and Dai, Luke and Johnston, Michael and Liu, Yang and Walker, Marilyn and Ghanadan, Reza},
  journal={arXiv preprint arXiv:2301.13372},
  year={2023}
}